Deepfake technology, powered by artificial intelligence (AI), has become a growing concern in recent years. Using advanced machine learning techniques, deepfake tools can generate highly realistic images, videos, and audio recordings that are difficult to distinguish from authentic media. While this technology has potential applications in entertainment and education, it also poses significant ethical, social, and political challenges.

What Are Deepfakes?

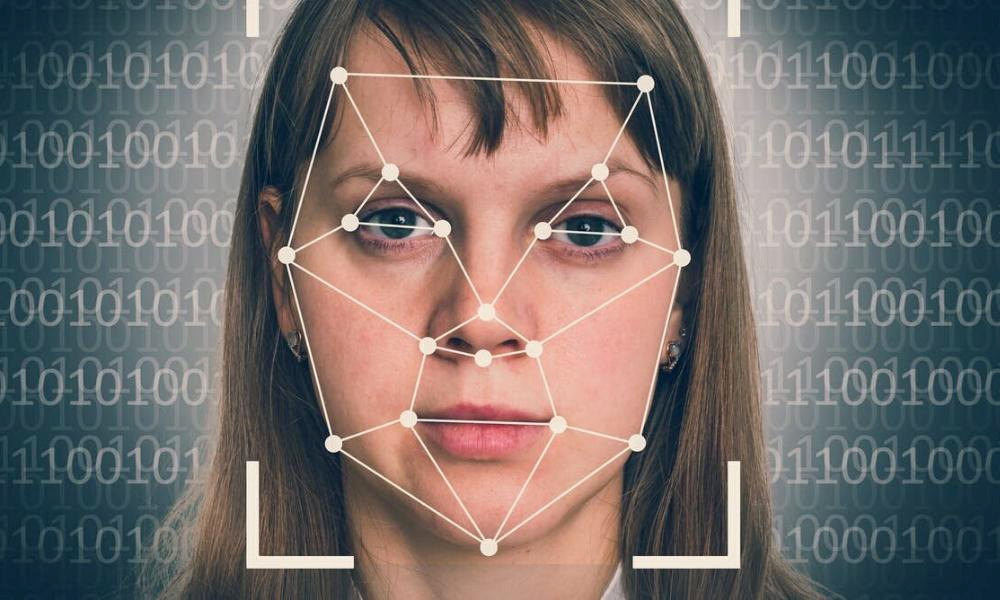

Deepfakes are synthetic media created using AI algorithms, particularly deep learning and neural networks. These models learn from large amounts of existing images, video, and audio, then generate realistic digital content. The results range from altered facial expressions in video to cloned voices, which makes it possible to fabricate convincing footage of events that never happened.

The Positive Uses of Deepfake Technology

Despite its risks, deepfake technology has some beneficial applications:

- Entertainment Industry: Filmmakers use deepfakes to de-age actors, recreate historical figures, or enhance visual effects.

- Education and Training: AI-generated simulations help in medical training, historical reconstructions, and language learning.

- Accessibility: Deepfake voice technology assists people with disabilities, such as those who have lost the ability to speak, by generating personalized synthetic voices.

- Marketing and Advertising: Brands use AI-generated influencers and personalized content to engage customers.

The Risks and Ethical Concerns

While deepfake technology offers potential benefits, its misuse is becoming a global concern:

- Misinformation and Fake News

Deepfakes can be used to create false narratives, making it harder to differentiate between truth and lies. Fake videos of politicians or public figures can influence public opinion and disrupt democratic processes.

- Cybercrime and Fraud

Scammers use deepfake audio and video to impersonate people, leading to financial fraud and identity theft. Criminals have already used AI-generated voices to deceive businesses into transferring large sums of money.

- Privacy Violations and Harassment

Deepfake technology has been misused to create non-consensual explicit content, targeting celebrities and private individuals. This raises serious concerns about personal privacy and online safety.

- Trust in Media and Society

With deepfakes becoming more convincing, people may start questioning all digital content, leading to a decline in trust in news, journalism, and even legal evidence.

Combating the Deepfake Threat

Governments, tech companies, and researchers are working on solutions to detect and prevent deepfake misuse:

- AI Detection Tools: Companies like Microsoft and Facebook are developing AI-based solutions to detect deepfakes.

- Regulations and Laws: Several countries are introducing laws to criminalize deepfake misuse. India is also considering strict measures against AI-driven misinformation.

- Public Awareness: Educating people about deepfake risks can help them verify information before trusting digital content.

The Future of Deepfake Technology

As AI continues to advance, deepfakes will become even more realistic and harder to detect. While they offer innovative applications, their potential for harm cannot be ignored. The challenge lies in balancing technological progress with ethical responsibility to ensure deepfake technology benefits society rather than threatens it.

As deepfake technology becomes more sophisticated, AI-driven detection tools are being developed to counteract its misuse. Tech companies are investing in machine learning algorithms that can analyze facial movements, pixel inconsistencies, and voice modulations to identify manipulated content. However, detection alone is not enough. Governments and social media platforms must implement stricter policies to prevent the spread of deceptive content while ensuring freedom of expression.
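To make the idea of combining several signals concrete, here is a toy sketch in Python. It assumes that separate, hypothetical models have already produced a score between 0 and 1 for facial movements, pixel inconsistencies, and voice modulations, and it simply merges them with a weighted average. The names `SignalScores`, `WEIGHTS`, `manipulation_score`, and `is_suspect`, along with the weights and the 0.5 threshold, are illustrative assumptions and do not come from any real detection product.

```python
from dataclasses import dataclass


@dataclass
class SignalScores:
    """Per-signal scores from hypothetical upstream models.

    Each value runs from 0.0 (looks authentic) to 1.0 (looks manipulated).
    """
    facial_movement: float
    pixel_inconsistency: float
    voice_modulation: float


# Hand-picked weights for illustration only (assumption); they sum to 1.0.
WEIGHTS = {
    "facial_movement": 0.4,
    "pixel_inconsistency": 0.4,
    "voice_modulation": 0.2,
}


def manipulation_score(scores: SignalScores) -> float:
    """Return the weighted average of the three signal scores."""
    total = 0.0
    for name, weight in WEIGHTS.items():
        value = getattr(scores, name)
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
        total += weight * value
    return total


def is_suspect(scores: SignalScores, threshold: float = 0.5) -> bool:
    """Flag the clip for human review when the combined score reaches the threshold."""
    return manipulation_score(scores) >= threshold


if __name__ == "__main__":
    clip = SignalScores(facial_movement=0.9, pixel_inconsistency=0.7, voice_modulation=0.2)
    print(round(manipulation_score(clip), 2), is_suspect(clip))
```

A production system would likely learn how to combine such signals from labeled examples instead of relying on fixed weights, and a flagged clip would still need human review.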

Equally important is media literacy. Educating the public on how to identify deepfakes can reduce the impact of misinformation. Fact-checking organizations and independent researchers play a crucial role in verifying digital content before it goes viral. Schools and universities should introduce digital literacy programs that teach students how to critically assess online information.

While AI is at the core of both the problem and the solution, human vigilance remains essential. By combining technology, regulation, and awareness, society can mitigate the negative effects of deepfakes while harnessing their potential for creative and ethical applications.